Runway AI has unveiled Aleph, a revolutionary video editing tool that changes existing footage through simple text commands. The tool lets users add or remove objects, adjust lighting, and modify people’s appearances to make them look older or younger. Users can create professional video effects without complex VFX processes.

The system maintains consistency across frames while editing, and it can even generate new camera angles from existing footage. Runway says the technology is already in use at major studios, ad agencies, architecture firms, gaming companies, and eCommerce teams. Runway presents this launch as the start of a broader push into video editing, with more advances expected soon.

1. Runway AI launches Aleph to redefine video editing

Image Source: No Film School

Runway AI launched Aleph on July 25, 2025. The AI video model changes how creators edit and manipulate video content. Earlier AI video tools focused on generating new videos from text. Aleph instead offers a conversational interface that lets users make sophisticated edits to existing footage through simple text prompts.

The company built Aleph on its research into General World Models and Simulation Models. Runway describes it as “a state-of-the-art in-context video model, setting a new frontier for multi-task visual generation”. According to Runway, complex edits that would take hours in traditional editing software can be completed in seconds.

Aleph differs from Runway’s Gen-1 and Gen-2 models by focusing on what the company calls “fluid editing.” Rather than only creating new videos, it lets users transform existing content: removing cars from shots, changing backgrounds, or restyling entire scenes with simple text instructions.

AI video tools have traditionally struggled to maintain continuity across frames. Aleph addresses this with local and global editing features that keep scenes, characters, and environments consistent throughout the footage, so users spend far less time fixing the frame-by-frame glitches common in AI-edited videos.

The system offers these powerful features:

- Object manipulation to add, remove, or transform scene elements

- Environmental controls that change lighting, weather, and time of day

- Camera angle generation from existing footage

- Style transfers for different artistic looks

- Character modification without complex makeup or VFX

“Runway Aleph is more than a new model — it’s a new way of thinking about video altogether,” Runway stated in its announcement. In practice, it marks a fundamental change in post-production workflows, one that can reduce costs and time for content creators of all types.

Competition in AI video creation has intensified this year, with OpenAI, Google, Microsoft, and Meta all showcasing their models. Runway AI, which helped popularize AI video with earlier models, aims to stay ahead by combining high-fidelity generation with fast, conversational editing.

The system is already in professional use. Runway reports that “major studios, ad agencies, architecture firms, gaming companies and eCommerce teams” are using Aleph, and these early adopters are exploring how it can simplify their production processes and unlock new creative possibilities.

Runway provides early access to enterprise customers and creative partners, with broader availability “rolling out in the coming days”. This staged rollout helps the company refine the technology based on professional user feedback before wider release.

Aleph marks a major step forward in video manipulation. By reducing the need for keyframing, masking, and the other technical skills that traditional software demands, it makes sophisticated video editing accessible to creators at every skill level.

2. Aleph enables prompt-based video manipulation

Image Source: TechEBlog

Aleph uses a prompt-based interface for manipulating video content. As an “in-context video model,” it follows natural language instructions and lets you make complex edits without specialized software or technical expertise.

2.1 Users can add, remove, or transform objects

Aleph’s object manipulation capabilities let you modify video elements naturally. You can add new objects that weren’t there before, take out things you don’t want, or change existing elements into something completely different.

Each prompt needs just two parts:

- An action verb (add, remove, change, replace)

- A description of what you want to change

For example, you could type “add fireworks to this scene” or “remove the man in red”. Aleph processes these instructions and keeps the footage looking natural throughout.
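To make the two-part structure concrete, here is a minimal Python sketch of a hypothetical helper that assembles and checks prompts before you paste them into Aleph. Runway does not publish such a function: `build_edit_prompt`, `split_edit_prompt`, and the verb list are illustrative assumptions based only on the four verbs named above.

```python
# Hypothetical prompt helper: NOT part of any Runway API.
# It only illustrates the "action verb + description" prompt structure.

# The four verbs named in this article; Aleph may well accept others.
ACTION_VERBS = ("add", "remove", "change", "replace")


def build_edit_prompt(verb: str, description: str) -> str:
    """Join an action verb and a description into a single edit prompt."""
    verb = verb.strip().lower()
    description = description.strip()
    if verb not in ACTION_VERBS:
        raise ValueError(f"unknown action verb: {verb!r}")
    if not description:
        raise ValueError("the description must not be empty")
    return f"{verb} {description}"


def split_edit_prompt(prompt: str) -> tuple[str, str]:
    """Split a prompt into (verb, description), validating both parts."""
    verb, _, description = prompt.strip().partition(" ")
    # Reuse the builder's checks so both functions agree on what is valid.
    build_edit_prompt(verb, description)
    return verb.lower(), description.strip()


if __name__ == "__main__":
    print(build_edit_prompt("add", "fireworks to this scene"))
    print(split_edit_prompt("remove the man in red"))
```

Keeping the verb list in one tuple means the checks stay in sync if you later discover Aleph responds well to other verbs.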

The system handles complex changes too. A car driving down a road can become a horse and carriage while keeping the original movement. You can also modify people’s appearances to make them look older or younger without editing each frame.

The tool helps you add crowds to empty streets, put products on tables, or insert props you missed during filming. Aleph blends these new elements into the shot with matching lighting, shadows, and perspective.

2.2 Change lighting, weather, and time of day

Aleph gives you fine-grained control over how your footage looks. You can change:

- Lighting conditions and sources

- Weather elements (rain, snow, fog)

- Seasons (summer to winter)

- Time of day (day to night conversions)

Aleph can turn a sunny park into a winter scene or make day look like night. These changes keep the original elements intact while giving the scene a different mood and feel.

Relighting is especially useful for footage shot in harsh midday light. You can ask it to “change the scene to use warm, natural lighting” or “change the lighting so the left side of his face is lit with an orange light”. Aleph then adjusts the main light sources and updates shadows, reflections, and color temperature across the whole scene.

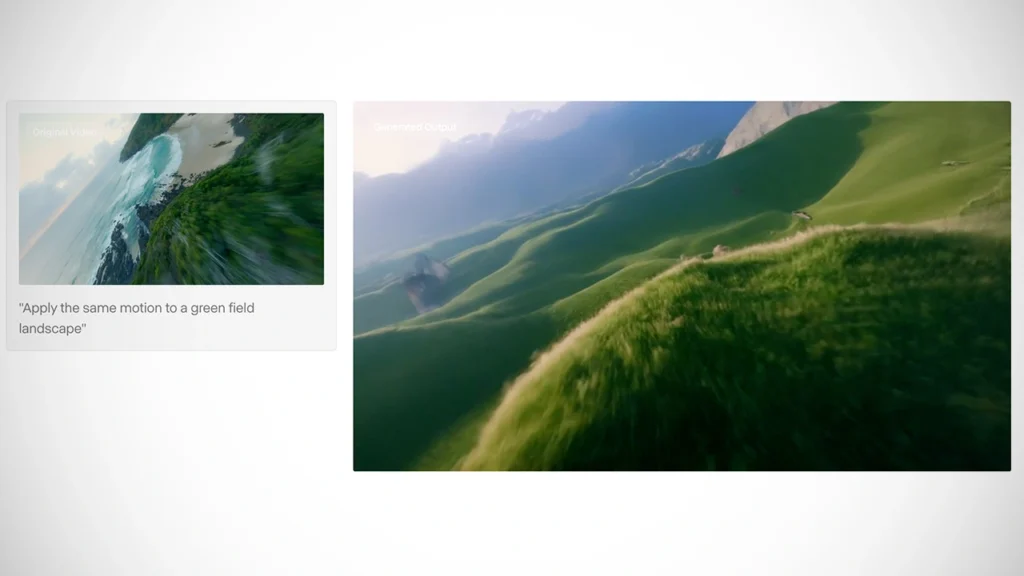

2.3 Generate new camera angles from existing footage

Aleph’s most impressive feature is generating new viewpoints from existing video, producing camera angles that were never filmed during production.

Simple prompts such as “show me a different angle of this video” or “change the camera to a wide angle that shows the entire person” lead Aleph to analyze the scene and render new views.

You can:

- Make wide shots from close-ups

- Create reverse angles

- Get over-the-shoulder views

- Turn static footage into tracking shots

The system can also create the next shot in a sequence based on your original video. This helps you fill in missing coverage or try new creative ideas without filming again.

Aleph keeps everything consistent across these new viewpoints. It models how objects relate in space and keeps the scene plausible, even when showing areas that weren’t in the original footage.

Together, these features transform video editing. Instead of spending hours adjusting individual frames in traditional software, you describe what you want and Aleph applies the change across your clip. This cuts editing time considerably and opens up creative possibilities that technical limits used to block.

3. How Aleph improves on Gen-1 and Gen-2 models

Image Source: Appy Pie Design

Aleph is a major step forward for Runway AI. It goes well beyond what the Gen-1 and Gen-2 models could do, letting creators produce professional-looking edits with simple text commands.

3.1 From generation to live editing

Earlier Runway models were used mainly to create videos from scratch. Aleph shifts the focus to live, contextual video editing: you can transform existing footage directly instead of generating entirely new content.

Aleph builds on Runway’s deep research into General World Models and Simulation Models. The breakthrough comes from what Runway calls “unified, contextual processing”. Unlike older AI tools that handled one task at a time, Aleph looks at the whole video context to:

- Process multi-task visual transformations simultaneously

- Apply edits while keeping the original scene intact

- Make complex changes through simple language commands

- Keep visual continuity throughout the edited footage

Aleph also shows several major advances over older models:

- 3D video understanding that blends different camera angles

- Advanced object tracking that maintains temporal coherence

- Cinematic style interpretation that blends lighting scenarios naturally

- Performance optimizations that make processing faster than in previous models

3.2 Maintaining continuity across frames

Another key feature tackles one of AI video’s toughest challenges: keeping visual consistency across edited frames. Older models often introduced glitches between frames that needed manual fixes.

Aleph uses local and global editing features to keep scenes, characters, and environments consistent. When you add or remove objects, change lighting, or alter other elements, the changes stay coherent throughout the clip.

Aleph largely removes the need to adjust each frame manually. During scene changes, the system adjusts shadows, reflections, and lighting to match the original environment, so edited videos look professional without the obvious AI artifacts seen in older tools.

3.3 Conversational interface for efficient workflows

Aleph’s conversational interface changes how creators work with video editing tools. You don’t need to learn complex software or technical terms: just tell Aleph what you want.

This natural language approach makes post-production much faster. Aleph understands plain commands like “add falling snow” or “change the camera to a side angle” and handles technical tasks that usually require:

- Rotoscoping

- Compositing

- 3D rendering

- Camera tracking

- Color grading

The conversational format makes the workflow accessible. Aleph works more like a creative partner than just another tool, letting you handle complex editing tasks without technical knowledge.

Aleph combines tasks that used to require separate specialized tools and teams of experts. This continues the consolidation seen in traditional editing software, but goes further by lowering the technical barriers.

Aleph shows a move toward production-ready AI systems instead of experimental tools. The browser-based platform removes old barriers like expensive hardware or complex software setups. Advanced video effects become available to many more creators, changing how post-production works.

4. Who is using Aleph and how

Image Source: Runway

Creative professionals across many industries have started using Aleph. The tool changes how they work on projects, putting complex visual effects within reach of simple text prompts.

4.1 Studios and ad agencies adopt Aleph

Major studios and advertising agencies are leading Aleph’s adoption, using it to simplify post-production processes that once required specialized teams and expensive software.

Studios benefit from Aleph in several ways:

- They complete visual effects shots faster

- Routine editing tasks cost less

- Teams can test creative concepts quickly before investing resources

Ad agencies use Aleph to create multiple versions of the same content. They can adapt a single commercial for different markets by changing backgrounds, weather, or product placements without new shoots.

4.2 Use cases in gaming, architecture, and eCommerce

Aleph also serves distinct purposes in other industries:

Game developers use Aleph to create realistic environments and character animations. Some studios have connected Aleph with tokenization systems that track the ownership history of in-game items, letting players trade assets between games. This can create new revenue streams and keep players more engaged.

Architects use it to show clients how buildings look under different lighting, weather, or seasons, which helps clients understand design proposals before construction starts.

eCommerce teams use Aleph to turn a single source clip into videos that show products in different settings or demonstrate their performance under various conditions.

4.3 Enterprise access and rollout timeline

Runway AI has made Aleph available to Enterprise accounts and Creative Partners, who provide feedback on the system’s capabilities during this initial phase.

The company’s rollout timeline includes:

- Enterprise customers and creative partners get first access

- More users join “in the coming days”

- Everyone can access it soon

This step-by-step approach lets Runway AI improve the technology based on actual usage before wider release. Enterprise users often have specific technical needs and advanced video production requirements.

Current enterprise clients receive dedicated support to integrate Aleph into their existing workflows, so they can make full use of the technology while keeping production standards high.

Aleph runs in the browser, so teams don’t need special hardware or complex software installations. This is especially helpful for remote teams working from different locations.

Early enterprise users mostly apply Aleph to work they used to outsource to VFX companies, bringing sophisticated video production in-house.

5. How Aleph compares to other AI video tools

Image Source: CNET

AI video creation is heating up in 2025, and Runway AI’s Aleph stands out with its unique approach. Let’s see how this tool matches up against other big names in the market.

5.1 Runway vs. OpenAI Sora and Google Veo

Aleph works quite differently from its competitors. OpenAI’s Sora generates realistic videos up to a minute long from text prompts, while Aleph focuses on editing existing footage. The difference reflects their contrasting design philosophies.

Google Veo 3 has been called the “most advanced and realistic AI video generator”. It offers native audio, realistic lip-sync, and expressive, human-like faces, none of which Aleph has matched yet.

Each tool brings something special to the table:

- Sora: Creates high-quality cinematic content from text prompts

- Veo: Leads in audio sync and realistic human expressions

- Aleph: Leads in editing and transforming existing footage

5.2 Strengths in editing vs. generation

Aleph’s real strength is editing. While other tools create new videos from scratch, Aleph performs “fluid editing” on existing footage, which makes it a valuable asset for post-production work.

Compared with Luma’s Modify Video model, Aleph produces better results when creating new camera angles. Luma does allow longer clips, though: up to 10 seconds versus Aleph’s 5-second limit.

Aleph’s key advantages include:

- Multi-task visual generation in one model

- Keeps lighting, shadows, and viewpoint consistent in edits

- Better temporal consistency across video frames

- User-friendly interface for complex editing commands

Independent testing shows Aleph does an excellent job “removing people, replacing characters, and adding weather effects”. The tool keeps characters more consistent across shots than its rivals.

5.3 Limitations in resolution and duration

Despite its impressive features, Aleph has some technical limits. It only works with single clips up to 5 seconds long, caps file sizes at 64 MB, and restricts resolution to specific formats such as 720×1280 and 960×960.
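Because these limits are easy to hit, a quick local check before uploading can save a wasted attempt. The Python sketch below is a hypothetical pre-flight helper, not a Runway tool. It hard-codes the 5-second and 64 MB limits reported in this article, treats 64 MB as 64,000,000 bytes (the stricter reading, an assumption), reads each resolution pair as width × height (also an assumption), and takes the allowed resolutions as a parameter because only two example formats are named. Check Runway's own documentation for the current values.

```python
from dataclasses import dataclass

# Limits as reported in this article; confirm against Runway's current docs.
MAX_DURATION_S = 5.0
# Assumption: "64 MB" read as decimal megabytes (the stricter interpretation).
MAX_FILE_BYTES = 64 * 1000 * 1000
# Assumption: only the two example formats named in the article, read as
# (width, height). Pass your own set if Runway supports others.
EXAMPLE_RESOLUTIONS = frozenset({(720, 1280), (960, 960)})


@dataclass(frozen=True)
class ClipInfo:
    """Basic metadata about a clip you plan to edit (hypothetical type)."""
    duration_s: float
    size_bytes: int
    width: int
    height: int


def preflight_problems(clip: ClipInfo,
                       allowed_resolutions=EXAMPLE_RESOLUTIONS) -> list[str]:
    """Return human-readable problems; an empty list means the clip fits."""
    problems = []
    if clip.duration_s > MAX_DURATION_S:
        problems.append(
            f"duration {clip.duration_s:g} s exceeds the {MAX_DURATION_S:g} s limit")
    if clip.size_bytes > MAX_FILE_BYTES:
        problems.append(
            f"file size {clip.size_bytes} bytes exceeds {MAX_FILE_BYTES} bytes")
    if (clip.width, clip.height) not in allowed_resolutions:
        problems.append(
            f"resolution {clip.width}x{clip.height} is not in the allowed set")
    return problems


if __name__ == "__main__":
    long_hd_clip = ClipInfo(duration_s=8.0, size_bytes=90_000_000,
                            width=1920, height=1080)
    for problem in preflight_problems(long_hd_clip):
        print("-", problem)
```

An empty list means the clip fits the limits as stated here; a non-empty list tells you whether to trim, compress, or resize first. Keeping the resolution set as a parameter avoids baking a guess about Runway's full format list into the check.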

Sora does better here, generating videos up to 60 seconds long. Aleph may also need several rendering attempts to produce the desired result because of occasional precision issues with image inputs.

The tool struggles with fast-moving footage, which often comes out blurry, and its rendering times lag behind those of traditional editing tools.

Professional creators find Aleph works best for specific editing tasks rather than complete video production, and many combine it with traditional editing software to finish and polish their projects.

6. What Aleph means for the future of video creation

Image Source: Magai

Runway AI’s Aleph represents a game-changing moment for video production. This powerful technology transforms how creators make, edit and share video content across industries.

6.1 Democratizing VFX and post-production

Runway AI’s video generation tools tear down the barriers to high-end visual effects. Professional-grade effects used to need specialized expertise and costly software. Now small productions can access Hollywood-quality effects with simple text prompts.

Browser-based tools eliminate the expensive hardware and complex setup that usually limit access. This democratization blurs the line between big Hollywood productions and small-budget projects, making high-quality visuals possible regardless of team size or budget.

6.2 Reducing cost and time for content creators

Aleph can significantly reduce production costs. Post-production work that used to be expensive now takes minutes instead of hours, and teams can complete complex VFX tasks through simple text commands.

By speeding up production, AI can help creative teams cut costs by up to 20%. Location scouting also becomes much cheaper, since teams can generate or modify environments later. Teams that adopt Aleph can boost their content output by about 30%, which leads to faster production cycles.

6.3 Potential for personalized entertainment

Aleph creates new possibilities for tailored content experiences. Its flexibility helps produce custom content for education and eCommerce advertising.

Creators can quickly make multiple versions of content for different contexts or audiences. Brands can produce ad variants without extra shoots, which works especially well for social media marketing, and eCommerce companies can show the same product in a variety of settings.

The development roadmap includes better model performance, faster generation and higher video resolution. Runway plans to add more interactive features like live collaborative editing and advanced narrative generation.

Conclusion

Aleph has changed how we create and edit videos. Runway AI’s tool brings professional visual effects to everyday creators, with simple text commands replacing complex editing workflows.

Aleph’s emphasis on fluid editing sets it apart from its generation-focused rivals. Users can modify existing footage instead of starting fresh, which saves time and resources, and the tool’s frame continuity addresses a problem that long plagued AI video tools.

Major studios, advertising agencies, gaming companies, and architecture firms have embraced Aleph, a sign of its real-world value. This technology gives everyone access to high-quality visual effects that once required special skills and costly software.

Aleph is reshaping the economics of video production. Text instructions now accomplish in minutes what once took hours of specialized work, and teams can create more content versions for different audiences without extra shoots or higher budgets.

Aleph signals the start of an exciting content creation era. The technology will grow stronger with better resolution, faster processing, and more creative options. Creators now step into a new world where imagination, not technical skills, sets the limits of video production.

Key Takeaways

Runway AI’s Aleph revolutionizes video editing by transforming complex post-production into simple text commands, making professional-grade visual effects accessible to creators of all skill levels.

• Aleph enables conversational video editing – Transform existing footage through natural language prompts like “add rain” or “change lighting to sunset” without technical expertise.

• Maintains visual continuity across frames – Unlike previous AI tools, Aleph preserves consistency in lighting, shadows, and object tracking throughout video transformations.

• Generates new camera angles from existing footage – Create wide shots from close-ups or entirely new perspectives without additional filming or camera setups.

• Major studios and agencies are already adopting it – Enterprise customers across gaming, architecture, and eCommerce are implementing Aleph in production workflows.

• Reduces production costs by up to 20% – Eliminates expensive VFX work and location shoots while boosting content output by approximately 30%.

This breakthrough technology democratizes Hollywood-quality visual effects, transforming video production from a technical skill requirement into a creative conversation with AI. Aleph represents the future where imagination, not expertise, defines what’s possible in video creation.

FAQs

Q1. What is Runway AI’s Aleph and how does it transform video editing?
Aleph is Runway AI’s latest video editing tool that allows users to manipulate existing footage through simple text prompts. It enables adding or removing objects, changing lighting and weather, and even generating new camera angles without complex technical skills.

Q2. How does Aleph compare to previous AI video models?
Unlike earlier models focused on generating videos from scratch, Aleph specializes in editing existing footage directly. It maintains visual continuity across frames and offers a conversational interface for faster, more intuitive workflows.

Q3. Who is currently using Aleph and for what purposes?
Major studios, advertising agencies, gaming companies, and architecture firms are already adopting Aleph. It’s being used for rapid prototyping, extending footage, fixing continuity issues, and creating multiple versions of content efficiently.

Q4. What are the current limitations of Aleph?
Aleph can only process clips up to 5 seconds long and has a maximum file size of 64 MB. It also has restricted resolution options, and its performance can vary with scene complexity.

Q5. How might Aleph impact the future of video creation?
Aleph is democratizing access to high-quality visual effects, potentially reducing production costs by up to 20% and increasing content output by about 30%. It opens up possibilities for more personalized content experiences across various industries.